SS depth reconstruction matrix

https://towardsdatascience.com/inverse-projection-transformation-c866ccedef1c

In this article, we will take a tour of the mathematics and concepts behind back-projection from 2D pixel coordinates to 3D points. I will then go through a simple example in Python to show the projection in action. Code is available here. We will assume that a depth map is provided to perform the 3D reconstruction. The concepts we will go through are camera calibration parameters, projective transformation using the intrinsic matrix and its inverse, and coordinate transformation between frames.

Central Projection of Pinhole Camera Model

First and foremost, understanding the geometrical model of the camera projection serves as the core idea. What we are ultimately interested in is the depth, parameter Z. Here, we consider the simplest pinhole camera model with no skew or distortion factor.

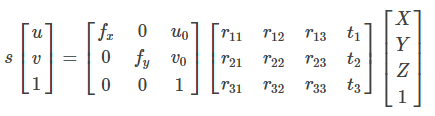

3D points are mapped to the image plane (u, v) = f(X, Y, Z). The complete mathematical model that describes this transformation can be written as p = K[R|t] * P.

where

- p is the projected point on the image plane

- K is the camera intrinsics matrix

- [R|t] is the extrinsic matrix describing the transformation of the point from the world frame to the camera frame

- P, [X, Y, Z, 1] represents the 3D point expressed in a predefined world coordinate system in Euclidean space

- Aspect ratio scaling, s: controls how pixels are scaled in the x and y direction as focal length changes
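As a minimal sketch of p = K[R|t] * P, the snippet below projects one world point to pixel coordinates. The intrinsic values, the identity rotation, the zero translation, and the helper name `project_point` are illustrative assumptions, not values from this article.

```python
import numpy as np

def project_point(K, R, t, P_world):
    """Project a 3D world point to pixel coordinates (u, v) via p = K [R|t] P."""
    Rt = np.hstack([R, t.reshape(3, 1)])  # 3x4 extrinsic matrix [R|t]
    P_h = np.append(P_world, 1.0)         # homogeneous point [X, Y, Z, 1]
    p = K @ Rt @ P_h                      # homogeneous pixel (u*Z, v*Z, Z)
    return p[:2] / p[2]                   # divide by the depth to get (u, v)

# Assumed example values (hypothetical camera)
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)      # camera axes aligned with the world axes
t = np.zeros(3)    # camera centre at the world origin

uv = project_point(K, R, t, np.array([1.0, 0.5, 5.0]))
print(uv)  # [420. 290.]
```

Note that the division by the third component is what discards the depth, which is why a depth map is needed to go back from pixels to 3D.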

Intrinsic parameter matrix

The matrix K is responsible for projecting 3D points to the image plane. To do that, the following quantities must be defined:

- Focal length (fx, fy): measures the position of the image plane with respect to the camera centre.

- Principal point (u0, v0): The optical centre of the image plane

- Skew factor: The misalignment from a square pixel if the image plane axes are not perpendicular. In our example, this is set to zero.
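Assembled into a matrix, these quantities give the usual pinhole intrinsic matrix. The symbol \gamma for the skew factor is notation assumed here (it is zero in our example), and the aspect ratio scaling is taken to be absorbed into fx and fy. With zero skew the inverse has a simple closed form:

```latex
K =
\begin{bmatrix}
f_x & \gamma & u_0 \\
0   & f_y    & v_0 \\
0   & 0      & 1
\end{bmatrix},
\qquad
K^{-1}\big|_{\gamma = 0} =
\begin{bmatrix}
1/f_x & 0     & -u_0/f_x \\
0     & 1/f_y & -v_0/f_y \\
0     & 0     & 1
\end{bmatrix}
```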

The most common way of solving for all the parameters is the checkerboard method, in which several 2D-3D correspondences are obtained through matching and the unknown parameters are solved by means of PnP or Direct Linear Transform, with RANSAC to improve robustness.

With all the unknowns determined, we can finally proceed to recover the 3D points (X, Y, Z) by applying the inverse.

Backprojection

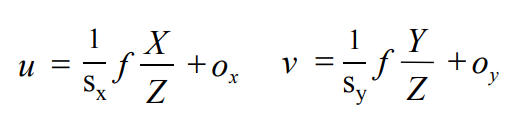

Consider the equation in Fig 4. Suppose (X, Y, Z, 1) is in the camera coordinate frame, i.e. we do not need to consider the extrinsic matrix [R|t]. With zero skew, expanding the equation gives u = fx * X / Z + u0 and v = fy * Y / Z + v0.

The 3D points can be recovered by taking Z from the depth map and solving for X and Y: X = (u - u0) * Z / fx and Y = (v - v0) * Z / fy. We can then further transform the points back to the world frame if needed.
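The sketch below back-projects a single pixel and then moves the result to the world frame. It assumes the extrinsic convention P_cam = R * P_world + t, so the inverse is P_world = R^T * (P_cam - t). The function names and all numeric values are hypothetical.

```python
import numpy as np

def backproject_pixel(u, v, Z, fx, fy, u0, v0):
    """Recover the camera-frame point (X, Y, Z) for pixel (u, v) with depth Z."""
    X = (u - u0) * Z / fx
    Y = (v - v0) * Z / fy
    return np.array([X, Y, Z])

def camera_to_world(P_cam, R, t):
    """Invert P_cam = R @ P_world + t (R is a rotation, so R^-1 = R^T)."""
    return R.T @ (P_cam - t)

# Assumed intrinsics and one pixel with known depth
fx = fy = 500.0
u0, v0 = 320.0, 240.0
P_cam = backproject_pixel(420.0, 290.0, 5.0, fx, fy, u0, v0)

# Assumed extrinsics: 90 degree rotation about the y axis plus a translation
R = np.array([[0.0, 0.0, 1.0],
              [0.0, 1.0, 0.0],
              [-1.0, 0.0, 0.0]])
t = np.array([0.0, 0.0, 1.0])
P_world = camera_to_world(P_cam, R, t)

print(P_cam, P_world)
```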

Inverse projection example

Let's go through a simple example to digest the concepts. We will use the RGB and depth image as shown in figure 1. The pictures are acquired from a camera mounted on a car in the CARLA simulator. The depth map is stored as float32 and encodes up to a maximum of 1000 m for depth values at infinity.

Intrinsic Parameters from Field of View

Instead of determining the intrinsic parameters using a checkerboard, one can calculate the focal lengths and optical centre for the pinhole camera model. The information needed is the imaging sensor height and width in pixels and the effective field of view in the vertical and horizontal directions. Camera manufacturers usually provide these. In our example, we will use ±45 degrees in both the vertical and horizontal directions. We will set the scale factor to 1.

Referring to fig 3, the focal lengths (fx, fy) and the principal point (u0, v0) can be determined using simple trigonometry. I leave it up to you to derive them as an exercise, or you can look them up in the code!
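For readers who want to check their derivation, here is one possible sketch. It assumes the principal point sits at the image centre and that the field of view is given as the full angle (90 degrees for ±45 degrees). The function name `intrinsics_from_fov` and the 1024 x 768 image size are hypothetical.

```python
import numpy as np

def intrinsics_from_fov(img_w, img_h, fov_h_deg, fov_v_deg):
    """Build K from the image size in pixels and the full field of view in degrees."""
    # Half the image width subtends half the horizontal field of view:
    # tan(fov_h / 2) = (img_w / 2) / fx
    fx = (img_w / 2.0) / np.tan(np.deg2rad(fov_h_deg) / 2.0)
    fy = (img_h / 2.0) / np.tan(np.deg2rad(fov_v_deg) / 2.0)
    u0, v0 = img_w / 2.0, img_h / 2.0  # assumed: principal point at image centre
    return np.array([[fx, 0.0, u0],
                     [0.0, fy, v0],
                     [0.0, 0.0, 1.0]])

# +-45 degrees in both directions is a full field of view of 90 degrees
K = intrinsics_from_fov(1024, 768, 90.0, 90.0)
K_inv = np.linalg.inv(K)
print(K)
```

Since tan(45 degrees) = 1, the focal lengths here come out as half the image width and half the image height in pixels.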

Now we can compute the inverse projection as follows:

- Obtain the intrinsic camera parameters, K

- Find the inverse of K

- Apply the equation in fig 5 with Z as the depth from the depth map.

    import numpy as np

    # Using linear algebra
    # pixel_coords is assumed to be a 3 x N array of homogeneous pixel
    # coordinates (u, v, 1), ordered row by row to match depth.flatten()
    cam_coords = K_inv @ pixel_coords * depth.flatten()

A slower but more intuitive way of writing step 3 is

    cam_points = np.zeros((img_h * img_w, 3))
    i = 0
    # Loop through each pixel in the image
    for v in range(img_h):
        for u in range(img_w):
            # Apply equation in fig 5
            x = (u - u0) * depth[v, u] / fx
            y = (v - v0) * depth[v, u] / fy
            z = depth[v, u]
            cam_points[i] = (x, y, z)
            i += 1

You will get the same results!
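To check that claim on a small case, the self-contained sketch below runs both versions on a random depth map and compares them. The image size, focal lengths, and random depth values are assumptions made for the test, and the way `pixel_coords` is built with `np.meshgrid` is one possible construction rather than necessarily the one in the original code.

```python
import numpy as np

# Assumed toy camera and depth map
img_h, img_w = 4, 6
fx = fy = 4.0
u0, v0 = img_w / 2.0, img_h / 2.0
K = np.array([[fx, 0.0, u0], [0.0, fy, v0], [0.0, 0.0, 1.0]])
K_inv = np.linalg.inv(K)
depth = np.random.default_rng(0).uniform(1.0, 50.0, size=(img_h, img_w))

# Homogeneous pixel coordinates (u, v, 1), row by row to match depth.flatten()
u_grid, v_grid = np.meshgrid(np.arange(img_w), np.arange(img_h))
pixel_coords = np.stack([u_grid.ravel(), v_grid.ravel(), np.ones(img_h * img_w)])

# Vectorised version: result has shape 3 x N
cam_coords = K_inv @ pixel_coords * depth.flatten()

# Loop version: result has shape N x 3
cam_points = np.zeros((img_h * img_w, 3))
i = 0
for v in range(img_h):
    for u in range(img_w):
        z = depth[v, u]
        cam_points[i] = ((u - u0) * z / fx, (v - v0) * z / fy, z)
        i += 1

same = np.allclose(cam_coords.T, cam_points)
print(same)
```

The two arrays differ only in layout: the vectorised result stores one point per column, the loop result one point per row, hence the transpose in the comparison.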

Conclusion

There we have it: I have gone through the basic concepts required to do a back-projection.

Back-projecting to 3D forms the basis of 3D scene reconstruction via Structure from Motion, where several images are captured from a moving camera, with depth either known or computed. The resulting points are then matched and stitched together to get a complete understanding of the scene structure.

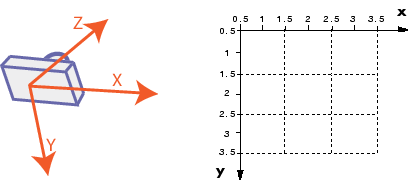

Orthographic Projection: Top View (Optional)

With the points represented in 3D, one interesting application is to project them to a top-down view of the scene. This is usually a useful representation for mobile robots, as the distances between obstacles are preserved. Furthermore, it is easy to interpret and to use for path planning and navigation tasks. For this, we need to know the coordinate system in which the points are expressed.

We will use the right-hand coordinate system as defined below

For this simple example, onto which plane do you think the points should be projected?

If your guess is the plane y = 0, you are right, as y represents height as defined by the camera coordinate system. We simply collapse the y component in the projection matrix.
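A minimal sketch of that collapse, assuming the camera-frame points are stored as an N x 3 array of (x, y, z) rows. The function name `top_view` and the two sample points are hypothetical.

```python
import numpy as np

def top_view(cam_points):
    """Orthographic projection onto the plane y = 0: keep x and z, drop y."""
    # 2x3 projection matrix with the y column zeroed out
    P_top = np.array([[1.0, 0.0, 0.0],
                      [0.0, 0.0, 1.0]])
    return cam_points @ P_top.T  # N x 2 array of (x, z)

# Two assumed points at different heights
pts = np.array([[1.0, -2.0, 5.0],
                [-3.0, 0.5, 10.0]])
xz = top_view(pts)

# Ground-plane separation between the two points, ignoring height
separation = np.linalg.norm(xz[0] - xz[1])
print(xz, separation)
```

Because the projection is orthographic, distances measured in the (x, z) plane are true ground-plane distances in the same units as the depth map.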

Looking at the figure below, you can easily measure the separation distances between all vehicles and objects.

